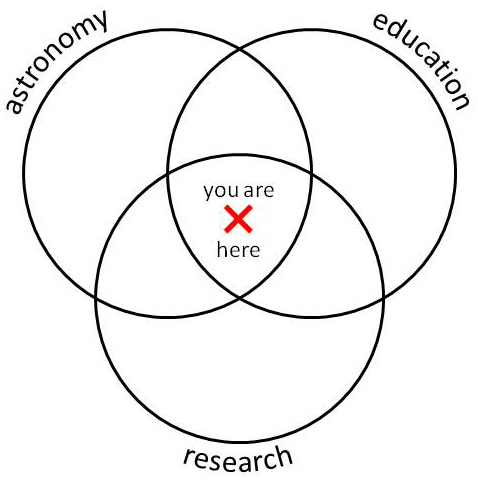

The course transformations I work on through the Carl Wieman Science Education Initiative (CWSEI) in Physics and Astronomy at UBC are based on a 3-pillared approach:

1. figure out what students should learn (by writing learning goals)

2. teach those concepts with research-based instructional strategies

3. assess if they learned 1. via 2.

Now that we’ve reached the end of the term, I’m working on Step 3. I’m mimicking the assessment described by Prather, Rudolph, Brissenden and Schlingman, “A national study assessing the teaching and learning of introductory astronomy. Part I. The effect of interactive instruction,” Am. J. Phys. 77(4), 320-330 (2009) [link to PDF]. They looked for a relationship between the normalized learning gain on a particular assessment tool, the Light and Spectroscopy Concept Inventory (LSCI) [PDF], and the fraction of class time spent on interactive, learner-centered activities. They collected data from 52 classes at 31 institutions across the U.S.

The result is not a clear-cut “more interaction = higher learning gain,” as one might naively expect. It’s a bit more subtle:

The key finding is this: to get learning gains above 0.30, classes must be at least 25% interactive. (A gain of 0.30 means that over the course of the term, the students learn 30% of the material they didn’t know coming in, and it is not a bad target.) In other words, if less than 25% of your class time is interactive, you are unlikely to get learning gains above 0.30, at least as measured by this particular tool.
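For readers who want the arithmetic, here is the class-average normalized gain as I understand it. I’m assuming the standard definition, where the pre- and post-test values are class-average scores in percent:

$$
\langle g \rangle = \frac{\langle \text{post} \rangle - \langle \text{pre} \rangle}{100\% - \langle \text{pre} \rangle}
$$

As a made-up example, a class that averages 25% on the pre-test and 47.5% on the post-test has a gain of (47.5 − 25) / (100 − 25) = 0.30.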

Notice it does not say that highly interactive classes guarantee learning: there are plenty of highly interactive classes with low learning gains.

Back in September, I started recording how much time we spent on interactive instruction in our course, ASTR 311. Between think-pair-share clicker questions, Lecture-tutorial worksheets and other types of worksheets, we spent about 35% of total class time on interactive activities.
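The bookkeeping behind that number is simple. Here is a minimal sketch of how such a tally could work, assuming one entry per class meeting; the `sessions` list and the function name are hypothetical, and the minutes are invented for illustration, not our actual log:

```python
# Hypothetical log: (class length in minutes, minutes of interactive activity).
# These numbers are invented for illustration only.
sessions = [(50, 15), (50, 20), (50, 10), (50, 25)]


def interactive_fraction(sessions):
    """Return the fraction of total class time spent on interactive activities."""
    total_minutes = sum(length for length, _ in sessions)
    interactive_minutes = sum(active for _, active in sessions)
    if total_minutes == 0:
        raise ValueError("no class time recorded")
    return interactive_minutes / total_minutes


fraction = interactive_fraction(sessions)
print(f"{fraction:.0%} of class time was interactive")
```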

We ran the LSCI as a pre-test in early September, long before we’d talked about light and spectroscopy, and again as a post-test at the end of October, after the students had seen the material in class and in a 1-hour hands-on spectroscopy lab. The learning gain across 94 matched pairs of tests (that is, using the pre- and post-test scores only for students who wrote both tests) came out to 0.42. Together, these statistics put our class nicely in the upper end of the study. They certainly support the 0.30/25% result.
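Here is a rough sketch of the matched-pairs calculation, assuming the gain is computed from class-average scores and that each test is stored as a mapping from student ID to raw score. The function name, the student IDs, the scores and the `max_score` of 20 are all invented for illustration; they are not our data.

```python
def matched_normalized_gain(pre, post, max_score):
    """Class-average normalized gain, using only students who wrote both tests."""
    matched = sorted(set(pre) & set(post))  # keep matched pairs only
    if not matched:
        raise ValueError("no students wrote both tests")
    pre_mean = sum(pre[s] for s in matched) / len(matched)
    post_mean = sum(post[s] for s in matched) / len(matched)
    if pre_mean >= max_score:
        raise ValueError("pre-test mean is already at the maximum score")
    gain = (post_mean - pre_mean) / (max_score - pre_mean)
    return gain, len(matched)


# Invented scores: s04 skipped the post-test and s05 skipped the pre-test,
# so neither counts toward the gain.
pre_scores = {"s01": 6, "s02": 8, "s03": 10, "s04": 7}
post_scores = {"s01": 14, "s02": 16, "s03": 18, "s05": 20}

gain, n_pairs = matched_normalized_gain(pre_scores, post_scores, max_score=20)
print(f"{n_pairs} matched pairs, gain = {gain:.2f}")
```

Dropping unmatched students first matters: otherwise students who only wrote one test would shift the pre- and post-test averages differently.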

Cool.

Okay, so they learned something. How come?

The next step is to compare student performance before and after this term’s course transformation. We don’t have LSCI data from previous years, but we do have old exams. On this term’s final exam, we purposely re-used a number of questions from the pre-transformation exam. I just need to collect some data, which means re-marking last year’s final exam using this year’s marking scheme. Ugh. That’s the subject of a future post…